Bring expert knowledge and experience anywhere, anytime through accessible, low-cost, but precise and efficient teleguidance of ultrasound examinations.

We are a research sub-group of the Robotics and Control Lab of the University of British Columbia, where we are developing a novel technology to make expert ultrasound available to remote and Indigenous communities through mixed reality "Human Teleoperation".

About

Bring expert healthcare to any location, any time through Mixed Reality Human Teleoperation.

Reduce travel, wait times, time away from work and family, CO2 emissions, and the cost of healthcare.

Operable by users with no prior experience, Human Teleop eliminates the need for complex, expensive, large robots.

Many remote communities have poor access to healthcare, even in wealthy countries like Canada. As a result, they spend a large fraction of their annual healthcare budget transporting patients to cities for diagnosis and treatment. Robotic telemedicine systems are often too expensive and complex for such small communities, while video conferencing is inefficient and imprecise. To fill this gap, we are developing a novel method called human teleoperation: a framework for tightly coupled guidance of a novice follower by an expert sonographer through a remote mixed reality (MR) system. By combining high-speed communication, MR, and haptics, we can achieve intuitive, fast, and precise teleoperation of an ultrasound procedure that pairs near robot-like performance with human flexibility and intelligence. This can greatly improve access to healthcare in small or remote communities.

The Human Teleoperation system consists of two halves: the patient side and the expert side.

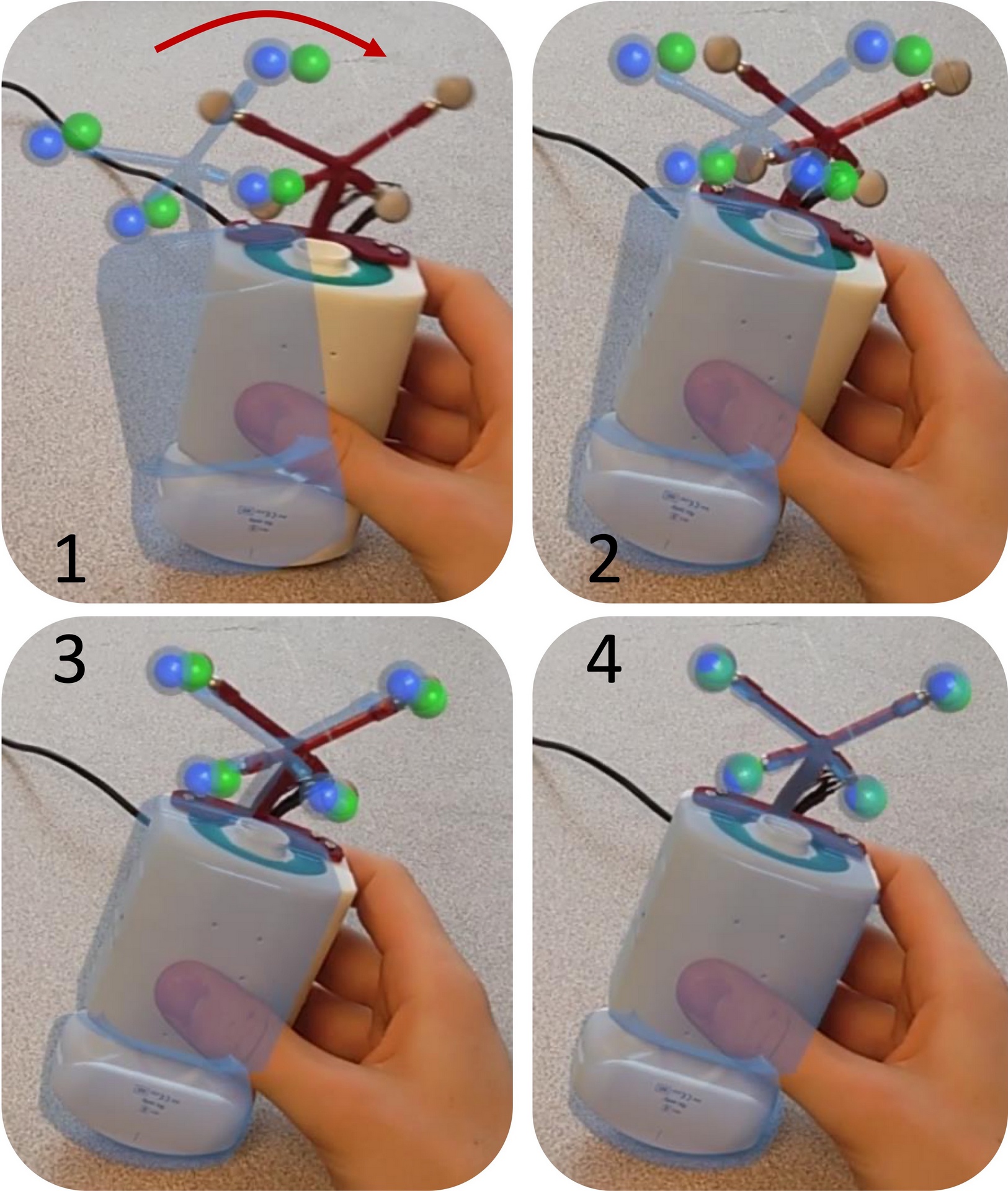

Patient Side: A novice (who may be the patient themselves) wears a mixed reality headset that displays a 3D virtual ultrasound probe. Their task is simply to align the real probe with the virtual one and follow it as it moves across the patient.
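To make the follower's task concrete, here is a minimal sketch of how the misalignment between the real and virtual probe could be quantified. It assumes, beyond what is stated above, that both poses are expressed in a common headset frame, with positions in metres and orientations as unit quaternions; `Pose` and `alignment_error` are hypothetical names used only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical probe pose: position in metres, orientation as a unit quaternion (w, x, y, z)."""
    position: tuple[float, float, float]
    orientation: tuple[float, float, float, float]


def alignment_error(real: Pose, virtual: Pose) -> tuple[float, float]:
    """Return (position error in mm, orientation error in degrees) between two poses."""
    # Euclidean distance between the probe positions, converted from metres to millimetres.
    pos_err_mm = 1000.0 * math.dist(real.position, virtual.position)
    # The rotation angle between unit quaternions q1 and q2 is 2*acos(|q1 . q2|);
    # the absolute value accounts for q and -q describing the same orientation.
    dot = abs(sum(a * b for a, b in zip(real.orientation, virtual.orientation)))
    dot = min(1.0, dot)  # guard against rounding pushing the dot product slightly above 1
    ang_err_deg = math.degrees(2.0 * math.acos(dot))
    return pos_err_mm, ang_err_deg


if __name__ == "__main__":
    real = Pose((0.10, 0.02, 0.30), (1.0, 0.0, 0.0, 0.0))
    half = math.radians(5.0)  # a 10 degree rotation about x has half-angle 5 degrees
    virtual = Pose((0.10, 0.02, 0.305), (math.cos(half), math.sin(half), 0.0, 0.0))
    print(alignment_error(real, virtual))  # roughly (5.0, 10.0)
```

Reporting position and orientation errors separately keeps the two quantities in intuitive units; how they would be shown to the follower is a design choice not covered here.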

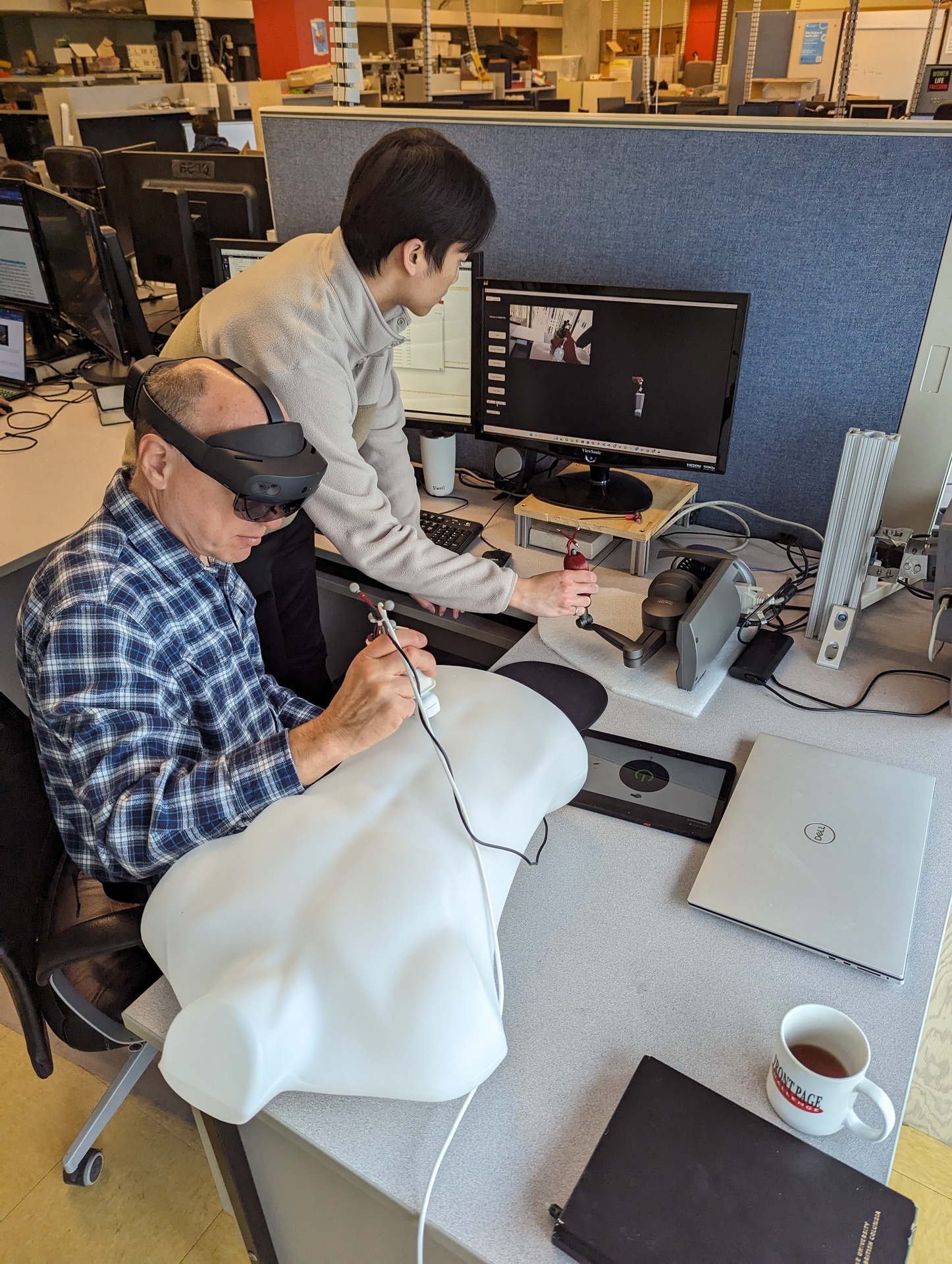

Expert Side: The expert sonographer, radiologist, or physician guides the procedure in a tightly coupled, hand-over-hand manner by controlling the position and orientation of the virtual probe in real time. To do so, they manipulate an input device called a haptic device, shown in the image above.

Read about how these two sides connect and enable teleoperation in the next section about the technology.

In Human Teleoperation, the expert feels almost as if they are performing the procedure in-person, directly on the patient. This is called transparency, and it enables the expert to perform high-quality scans by teleporting their skillset to the remote location. To achieve transparency, the expert must see and feel as if they were by the patient's side.

Vision: Over a high-speed, secure, 5G-enabled communication system, the follower's point-of-view camera video is streamed to the expert, as is the ultrasound image. The follower video includes the virtual holograms, so the expert can see exactly what the follower sees and does, and the ultrasound interface allows the expert to adjust imaging settings and parameters directly, as they would in person.

Haptics: During scans, sonographers focus exclusively on the ultrasound image and rely on their sense of touch to move the probe on the patient. Human Teleoperation mimics this remotely through haptic feedback. A force sensor on the ultrasound probe measures the force the novice applies, while the mixed reality headset captures an accurate 3D model of the patient and tracks the probe's position and orientation in real time. These measurements are combined to render haptic feedback on the expert's input device that is detailed, accurate, and robust to communication time delays.
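One plausible way to make such feedback robust to delay, in the spirit of the local patient models listed among the research areas on this page, is to render contact forces on the expert side from a local model and use the delayed remote measurements only to tune that model. The sketch below is an illustration under stated assumptions, not the published method: it approximates the patient surface near the probe as a plane with a single spring stiffness, and the class name, initial stiffness, and smoothing rule are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LocalContactModel:
    """Hypothetical local patient model: a plane (point + unit normal) with a spring stiffness.

    The plane is assumed to come from the headset's 3D patient model; the stiffness (N/m)
    is re-estimated from delayed force/indentation measurements sent from the patient side.
    """
    surface_point: tuple[float, float, float]
    normal: tuple[float, float, float]  # unit normal pointing out of the patient
    stiffness: float = 300.0            # assumed initial guess, N/m
    smoothing: float = 0.1              # weight given to each new stiffness estimate

    def penetration(self, probe_tip: tuple[float, float, float]) -> float:
        # Depth of the tip below the surface along the normal (> 0 means pressing in).
        d = sum((s - p) * n for s, p, n in zip(self.surface_point, probe_tip, self.normal))
        return max(0.0, d)

    def render_force(self, probe_tip: tuple[float, float, float]) -> tuple[float, ...]:
        """Force (N) fed back to the expert, computed locally with no round-trip delay."""
        depth = self.penetration(probe_tip)
        return tuple(self.stiffness * depth * n for n in self.normal)

    def update_stiffness(self, measured_force_n: float, measured_depth_m: float) -> None:
        """Blend in a stiffness estimate from a (possibly delayed) remote measurement."""
        if measured_depth_m <= 1e-4:  # skip near-zero indentation to avoid dividing by ~0
            return
        estimate = measured_force_n / measured_depth_m
        # Exponential smoothing: move a fraction of the way toward the new estimate.
        self.stiffness += self.smoothing * (estimate - self.stiffness)
```

Because `render_force` uses only local data, the expert would feel contact immediately even when measurements arrive late; the delay then only affects how quickly the stiffness estimate converges.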

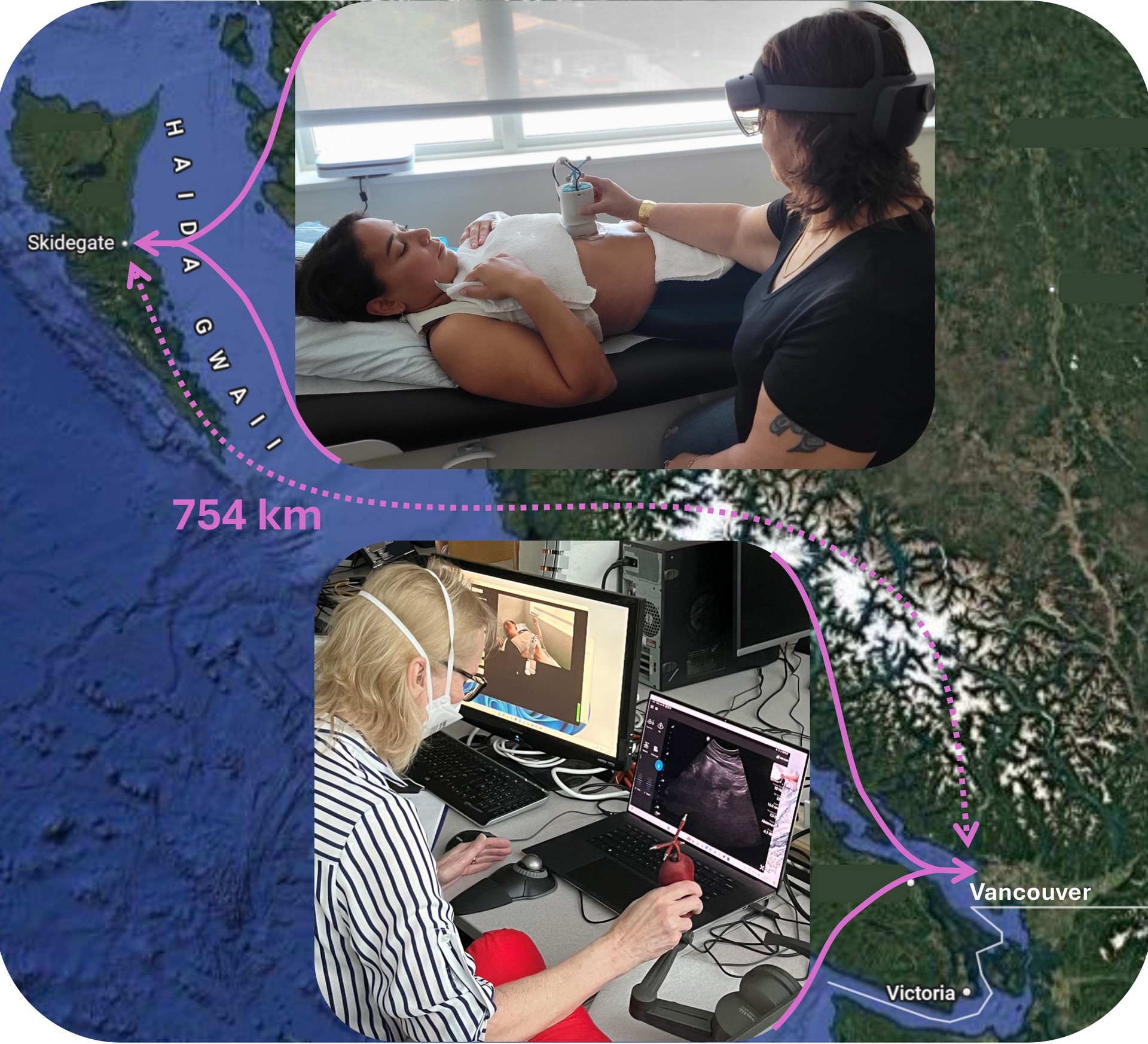

Throughout the development process, we have worked closely with two highly experienced sonographers, Jan Reid and Vickie Lessoway, and with Dr. Silvia Chang, a radiologist with expertise in abdominal ultrasound. We have also met and partnered with remote and Indigenous communities, including Skidegate and Bella Bella. In this way, we have ensured that the system addresses a real need and is truly usable by all parties.

We have performed guided ultrasound scans of patients with the Human Teleoperation system in our lab, in hospitals in Vancouver, and in Skidegate. In Skidegate, located 754 km north of Vancouver on Haida Gwaii, we performed 11 scans with 10 different novices, guided by sonographers at the University of British Columbia in Vancouver. None of the novices had any experience with ultrasound or mixed reality, yet all were able to perform the scan and acquire all 5 target images. Of the captured images, 93% were rated as clinically usable by two separate radiologists. NASA Task Load Index (NASA-TLX) questionnaires completed by all novices and patients indicated that the system was easy to use, quick to learn, and led to good performance.
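For readers unfamiliar with NASA-TLX: participants rate six workload subscales on a 0 to 100 scale, where lower means less workload (for the performance subscale, 0 means perfect and 100 means failure). The text above does not say whether the raw or weighted variant was used; the sketch below assumes the raw (unweighted) TLX, which is simply the mean of the six ratings. The function name and dictionary keys are illustrative, and the example ratings are made up, not study data.

```python
from statistics import mean

# The six NASA-TLX subscales, each rated from 0 (low workload) to 100 (high workload).
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings: dict[str, float]) -> float:
    """Raw (unweighted) TLX score: the mean of the six subscale ratings."""
    missing = [s for s in SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    for s in SUBSCALES:
        if not 0 <= ratings[s] <= 100:
            raise ValueError(f"{s} rating must be in [0, 100]")
    return mean(ratings[s] for s in SUBSCALES)


if __name__ == "__main__":
    # Hypothetical ratings for one participant, for illustration only.
    example = {"mental": 20, "physical": 10, "temporal": 15,
               "performance": 10, "effort": 25, "frustration": 5}
    print(round(raw_tlx(example), 1))  # 14.2
```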

Novel 6-axis, low-profile, low-cost ultrasound probe force sensors based on magnetic field readings

Methods for fast and accurate tracking of an ultrasound probe without added hardware

Physical and control-theoretic modeling and optimization of Human Teleoperation

Robust and realistic feedback through local visual and haptic models of the patient

Studying and optimizing how to guide a person's motions through mixed reality

Evaluating and improving the clinical performance and practicality of the system
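Taking the first of these research areas as an example, a force sensor based on magnetic field readings has to be calibrated so that raw field readings map to forces and torques. The sketch below assumes a linear model with a bias term, fitted per output axis by ordinary least squares in pure Python. This is a common starting point for such sensors, not necessarily the model used in the published sensors, and all function names are hypothetical.

```python
def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: readings do not span enough directions")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_axis(readings, forces) -> list[float]:
    """Least-squares fit of force ~ w . [reading, 1] for one output axis (normal equations)."""
    rows = [list(r) + [1.0] for r in readings]  # trailing 1 models a constant offset
    k = len(rows[0])
    ata = [[sum(row[i] * row[j] for row in rows) for j in range(k)] for i in range(k)]
    atb = [sum(row[i] * f for row, f in zip(rows, forces)) for i in range(k)]
    return solve(ata, atb)


def calibrate(readings, wrenches) -> list[list[float]]:
    """Fit one weight vector per output axis (e.g. Fx, Fy, Fz, Tx, Ty, Tz)."""
    n_axes = len(wrenches[0])
    return [fit_axis(readings, [w[a] for w in wrenches]) for a in range(n_axes)]


def predict(weights, reading) -> list[float]:
    """Map one raw magnetic reading to a force/torque vector using fitted weights."""
    x = list(reading) + [1.0]
    return [sum(wi * xi for wi, xi in zip(w, x)) for w in weights]
```

Fitting each axis separately gives the same result as fitting the full calibration matrix at once, because ordinary least squares with shared inputs decouples across outputs.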

Most of our work so far has been published in patents or in refereed journal and conference papers. Please see the full list, with PDFs, at the link below.

Research

For questions, potential partnerships, and community, hospital, technical, industrial, research, or other collaborations, please get in touch!